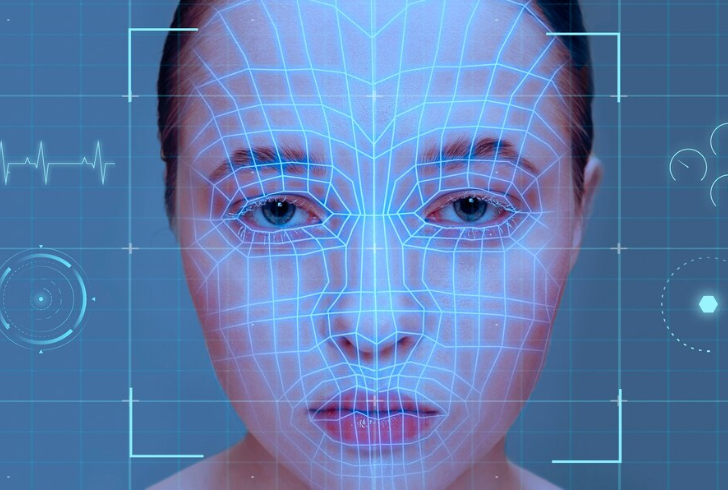

Facial recognition technology, hailed for its promise of convenience and accuracy, has come under growing scrutiny as its use in law enforcement increases. Despite widespread evidence of flaws and biases, police departments across the United States continue to rely on these systems, in some cases with serious consequences such as wrongful arrests.

This raises pressing questions about accountability and the ethical implications of using such technology.

The Flaws of Facial Recognition Technology

Multiple studies have documented significant shortcomings in facial recognition systems. A comprehensive 2019 NIST study found error rates that disproportionately affect Black and Asian individuals, who, depending on the algorithm, were up to 100 times more likely to be misidentified than white men. These inaccuracies stem from biases in both the algorithms and the datasets they rely on.

For instance, mugshot databases often contain a higher percentage of Black individuals due to disparities in arrest rates for minor offenses. These datasets, coupled with surveillance cameras disproportionately installed in predominantly Black and brown neighborhoods, amplify the likelihood of misidentification. Of eight documented wrongful arrests cited in a recent investigation, seven involved Black individuals, underscoring the technology’s racial bias.

Law Enforcement’s Overreliance on Faulty Systems

Rather than treating facial recognition as a supplemental tool, some police officers rely on it as definitive evidence, often neglecting standard investigative practices. Reports indicate that officers labeled AI-generated matches as “100% certain” without corroborating evidence. This overconfidence led investigators to neglect alibis, ignore physical discrepancies, and even dismiss DNA evidence pointing to other suspects.

In some cases, witnesses were improperly steered by AI results toward confirming an identification, further undermining the integrity of the process. Such misuse of technology highlights a broader issue: law enforcement’s failure to implement proper standards and oversight for the use of facial recognition.

Lack of Oversight and Accountability

One of the most concerning aspects of this issue is the absence of robust regulations governing facial recognition in policing. While some cities and states initially banned the technology, several, including Virginia and New Orleans, have since reversed these bans. In other instances, police departments bypass restrictions by outsourcing facial recognition searches to agencies in other jurisdictions.

Moreover, the lack of transparency exacerbates the problem. Prosecutors often treat facial recognition as an investigative tool rather than evidence, meaning defendants may never know it played a role in their arrest. Without mandatory reporting or strict enforcement of standards, misuse of this technology is likely to continue unchecked.

Expanding AI in Policing

Facial recognition is only one of several AI technologies being adopted by law enforcement. Tools capable of analyzing speech patterns, connecting surveillance feeds, and aggregating data from various sources are becoming more common. Without clear regulations, these systems risk being misused in ways similar to facial recognition.

Privacy and civil rights advocates argue for stringent controls, such as mandatory disclosure whenever facial recognition is used and a requirement that arrests be supported by additional evidence. As AI continues to expand into new areas of policing, the need for accountability and ethical standards becomes more urgent than ever.

A Call for Reform

The growing reliance on facial recognition technology in law enforcement highlights the urgent need for reform. Addressing its inherent flaws, ensuring transparency, and establishing strict usage standards are critical steps toward preventing further harm.

Until such measures are implemented, the risks of wrongful arrests and systemic biases will persist, undermining public trust in both technology and the institutions that use it.